Contents

- What is AI?

- AI is on the rise but should it be?

- AI In Everyday Life

- How does AI benefit our health?

- Conclusion

- References

What is AI?

AI: Stands for Artificial Intelligence

The term "artificial intelligence" describes the ability of machines to learn and solve problems, essentially mimicking humans by using specialised algorithms [1]. AI is an umbrella term that covers many subsets, from Machine Learning to Deep Learning. However, all of these subsets and definitions share one common attribute: they allow machines to mimic human intelligence.

AI is on the rise but should it be?

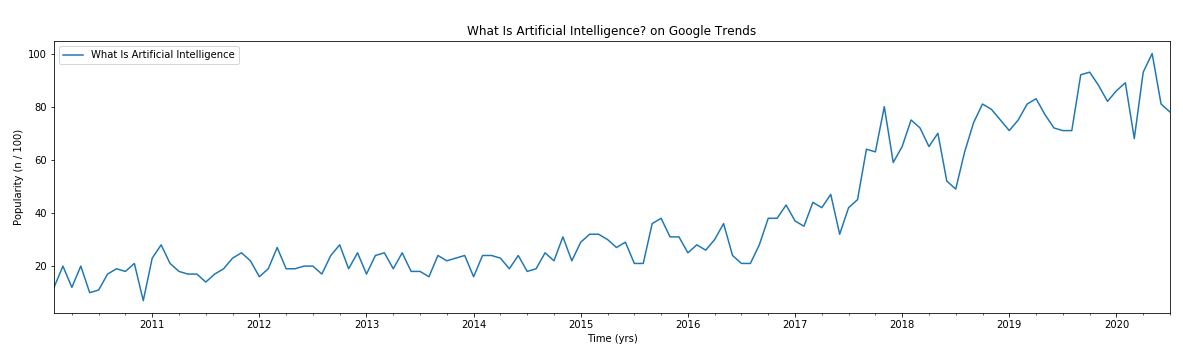

Using the Google Trends API to Retrieve Secondary Data on Recent Public Interest in AI and Its Usage

Disclaimer

All data presented was retrieved from the Google Trends™ database through its API (Application Programming Interface). I do not own or claim ownership of any data presented here. I am using it under the 'fair dealing' exceptions in Sections 29 and 30 of the Copyright, Designs and Patents Act 1988, which outline three instances where fair dealing is a legitimate defence:

- If the use is for the purposes of research or private study;

- If it is used for the purposes of criticism, review or quotation;

- Where it is utilised for the purposes of reporting current events (this does not apply to photographs)

In this case, I am using the data solely for research purposes to demonstrate, as stated earlier, the public's interest in AI.

Using Python's 3rd party modules to gather and display data

Using Python 3 with the third-party modules 'pandas' and 'pytrends' (an unofficial client for Google Trends), I searched for specific key phrases and plotted the results in the graphs below. The graphs show recent public interest in these subjects and how, in recent years, the public has come to view AI and its use in healthcare.

Representation:

Numbers represent search interest relative to the highest point on the chart for the given region and time. A value of 100 is the peak popularity for the term. A value of 50 means that the term is half as popular. A score of 0 means there was not enough data for this term. Again, all of this data was retrieved from Google™ and therefore abides by Google's chosen numerical scale.
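To make the 0 to 100 scale concrete, here is a minimal sketch of how a relative scale of this kind can be produced. It is only an illustration: the function name `to_relative_interest` and the weekly counts are invented for this example, and Google's own processing (which works on sampled, proportional search volumes) is not public in this form.

```python
def to_relative_interest(raw_counts):
    """Rescale values so that the largest one becomes 100."""
    peak = max(raw_counts)
    if peak == 0:
        # No searches at all: every point stays at 0
        return [0 for _ in raw_counts]
    return [round(100 * count / peak) for count in raw_counts]


# Hypothetical weekly search counts, invented purely for illustration
weekly_counts = [120, 300, 600, 450]
print(to_relative_interest(weekly_counts))  # [20, 50, 100, 75]
```

The week with 600 searches becomes the peak (100), and the week with 300 searches scores 50 because it is half as popular.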

```python
# Here we import the 3rd party modules required
# pandas is used to manipulate data frames
import pandas as pd
# pytrends is used to connect to the Google API and retrieve data
from pytrends.request import TrendReq

# Then we name the TrendReq() function as a pytrends variable to use throughout the script
pytrends = TrendReq()

# Then create a function that, whenever called, will create a graph based on the question asked
def PlotGraph(kw_list):
    keyword_list = [kw_list.title()]
    # This defines what variables I am looking for, e.g. "geo" asks where in the world you are
    # looking and using an empty space "" gives the value "all", telling it we are looking worldwide
    pytrends.build_payload(keyword_list, cat=0, timeframe='2010-01-14 2020-07-20', geo='', gprop='')
    # This looks for the key words interest over time in the period I specified in the code before
    data = pytrends.interest_over_time()
    # .plot() plots the data
    graph = data.plot(title=kw_list.title() + '? on Google Trends', figsize=(20, 5))
    # set_xlabel() and set_ylabel() label the graph
    graph.set_xlabel("Time (yrs)")
    graph.set_ylabel("Popularity (n / 100)")

# Here, I can now call the PlotGraph() function made earlier to plot a graph
# to show recent searches using the phrase "what is artificial intelligence"
PlotGraph("what is artificial intelligence")
```


This can also be done with a CSV file downloaded from Google Trends, using pandas in a Jupyter Notebook:

```python
# Imports pandas
import pandas as pd

# Makes a dataframe for the file, reading the 'Week' column as dates
df = pd.read_csv("What_Is_Artificial_Intelligence.csv", parse_dates=['Week'])

# Shows only the first 5 data points
df.head()

# Plots the data against the 'Week' column, with a suitable figure size and a title
graph = df.plot(x='Week', y='What Is Artificial Intelligence?', figsize=(15, 8),
                title="What is Artificial Intelligence? On Google Trends")
# Sets the x axis label
graph.set_xlabel("Time (yrs)")
# Sets the y axis label
graph.set_ylabel("Popularity (n / 100)")
```

To learn the basics of data analysis, go to the Open University's free course: Learn to code for data analysis

As shown in figure 1, over the last 5 years especially, there has been a steep, seemingly exponential increase in searches for what AI is. This suggests that the public is becoming increasingly interested in AI technology. That interest could lead to more AI being developed, as people of all ages begin their own research into the subject, and to more funding for the industry.
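Calling growth "exponential" is a specific claim, and it can be roughly checked. A series that grows exponentially becomes a straight line once you take its logarithm, so one simple test is to see whether a straight line fits the logged values better than the raw ones. The sketch below does this in plain Python. The helper names `r_squared` and `looks_exponential` are my own, the sample numbers are invented, and the check assumes every value is above 0 and that the values are not all identical.

```python
import math


def r_squared(xs, ys):
    """How well a least-squares straight line fits the points (1.0 is a perfect fit)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return (sxy ** 2) / (sxx * syy)


def looks_exponential(values):
    """True if a straight line fits log(values) better than it fits the raw values."""
    xs = list(range(len(values)))
    raw_fit = r_squared(xs, values)
    log_fit = r_squared(xs, [math.log(v) for v in values])
    return log_fit > raw_fit


# Invented yearly interest scores, for illustration only
print(looks_exponential([5, 10, 20, 40, 80]))   # doubling each year
print(looks_exponential([10, 20, 30, 40, 50]))  # rising by the same amount each year
```

This is a rough check rather than a statistical test. On real Google Trends data, any weeks with a score of 0 would need to be removed first.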

Now we plot the next graph:

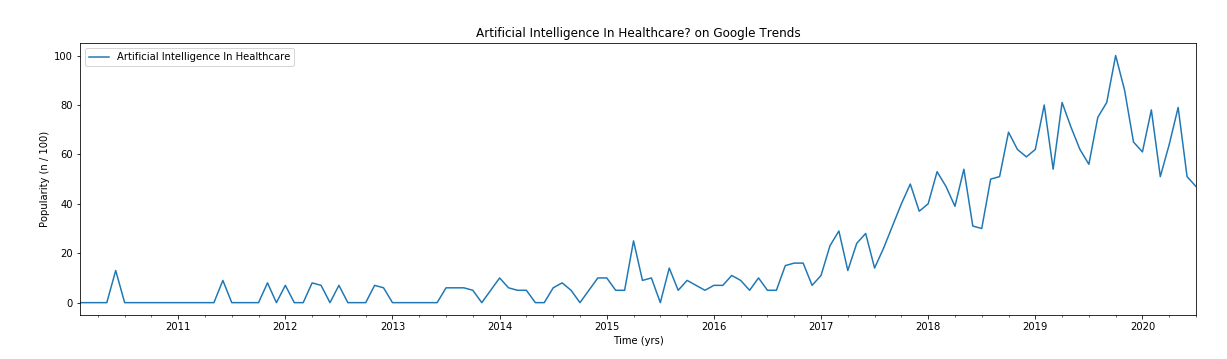

PlotGraph("artificial intelligence in healthcare")


Surprisingly, this data aligns with the data in figure 1, which shows public interest in what AI is. From 2017 onwards (figure 2), there is also a steep increase in searches, which could suggest that people who are learning about AI are also becoming more aware of its use in healthcare. AI is used in many types of treatment, from radiotherapy to radiology, and because of this more people are researching it. This can be a double-edged sword, as it can be both beneficial and harmful. On one hand, there is reason to believe that more people are becoming health literate in this age of technology and, by looking into treatments, are taking more control of their own care.

However, this could also backfire, as Google and most other search engines are biased towards popularity: their search algorithms tend to favour whichever sites are most popular, not necessarily the most accurate. This means that repeated searches can lead a patient to read something that is completely wrong, which may distort their judgement of a treatment with false, non-specific, or inapplicable information.

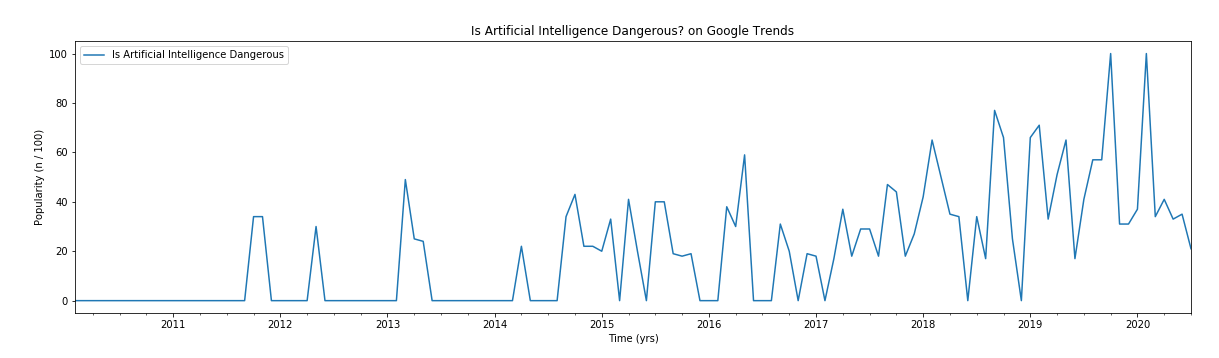

PlotGraph("is artificial intelligence dangerous")


Moreover, figure 3 shows that in recent years people have been searching for the question "is AI dangerous?". This suggests that a number of people do not yet trust AI, and a big reason for this may be that they do not know what AI is. Together, figures 1 and 3 tell us that there are a number of people who do not know or understand what AI is and who are scared of it. So while AI is on the rise, distrust of it is also rising, as biased search engines lead people to sites that are against AI as well as those that are pro-AI.

If a patient learned in a consultation that AI or a robot was the best solution and would be used in their surgery, and rejected the treatment because of this, then both their quality and length of life would be at risk. This is hazardous to public health and should be addressed. Computing and technology are becoming increasingly vital in this day and age and should therefore be taught in more detail to the general public, to reduce the risk of situations like this occurring.

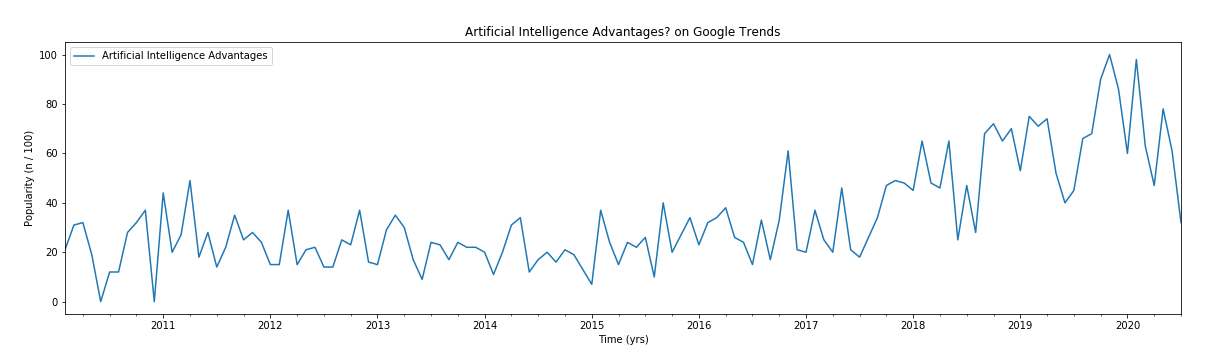

PlotGraph("artificial intelligence advantages")


Fortunately, figure 4 shows a much larger increase in searches for the advantages of AI than for its dangers. This shows that, while AI is on the rise, it's not all bad, as there are people researching the benefits of AI and how it could help them.

This data suggests that while AI technology is on the rise, it's not as dangerous as it seems, as there are people trying to understand it, and with understanding comes acceptance and growth. This interest can lead to more advancements in AI technology and its subsets, and to further funding in the future.
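One caveat when comparing figures 3 and 4: each Google Trends chart is scaled to its own peak, so a score of 100 on one chart is not the same number of searches as a score of 100 on another. To compare two phrases fairly, they can be requested together so that they share one scale. The sketch below shows one way to do that with pytrends. The function name `compare_terms` is my own, and `client` is assumed to be a `TrendReq()` object like the one created earlier.

```python
def compare_terms(client, terms, timeframe="2010-01-14 2020-07-20"):
    """Fetch several search phrases in one request so they share one 0-100 scale."""
    # One payload with all phrases: Google scales them against the same peak
    client.build_payload(terms, cat=0, timeframe=timeframe, geo="", gprop="")
    data = client.interest_over_time()
    # pytrends adds an 'isPartial' flag column; drop it (if present) before plotting
    return data.drop(columns=["isPartial"], errors="ignore")


# Usage, assuming pytrends is installed and Google Trends is reachable:
# from pytrends.request import TrendReq
# shared = compare_terms(TrendReq(), ["is artificial intelligence dangerous",
#                                     "artificial intelligence advantages"])
# shared.plot(figsize=(20, 5), title="Dangers vs advantages on one scale")
```

With both lines on one chart, whichever phrase is searched for more will sit visibly higher, which is a more reliable comparison than reading two separate graphs side by side.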

AI In Everyday Life

AI, and its subset Machine Learning, have a large variety of applications in daily life. One famous example is the self-driving car. With companies like Tesla™ at the forefront, AI and machine learning are being used and refined every day to provide safer cars and cause fewer accidents on the road. If all cars were self-driving, they could communicate with each other and therefore know where each vehicle was going, which could drastically reduce the risk of car accidents. Their computers can perform calculations millions of times faster than the human brain [2] and, as a result, could reduce the risk of accidents involving pedestrians and cyclists.

Another example is robots. You may not expect it, but even machines in your house use AI. The iRobot Roomba 980™ vacuum uses AI technology [3] to scan the size of a room and look for the most efficient path to clean while avoiding obstacles on the way. It can also assess how much cleaning it needs to do based on the size of the room: larger rooms require fewer cleaning cycles, while smaller ones, which get dirtier faster, get more. This is just one of the ways AI is being used in everyday life, and its ability to solve problems is continuously evolving.

How does AI benefit our health?

The picture above is an example of how AI can be used to improve modern medicine. The computer software automatically estimated the bone age, and therefore the approximate age, of the patient. This provides a fast and easy way to gauge the age of a person who may not know it themselves, e.g. a patient with amnesia.

AI is used widely in healthcare, especially in radiology and surgery. AI's ability to interpret radiological imaging can help health care professionals identify even the most minuscule change in a scan that might previously have been missed.

AI used in detection of COVID-19

AI can even be used to differentiate COVID-19 from pneumonia. In a paper from a Department of Radiology in Wuhan, China [4], AI was used to create a fully automatic framework that detects COVID-19 from chest CT scans and distinguishes it from pneumonia, which has similar symptoms. It used deep learning, a subset of AI, to train an artificial neural network on data sets of CT scans from patients with COVID-19 and from patients with only community acquired pneumonia. From these examples the network learned how COVID-19 differs from standard pneumonia on a scan. The experiment resulted in a 96% accuracy in deducing which CT scans showed COVID-19 and a 95% accuracy for which CT scans showed community acquired pneumonia.

This demonstrated that a deep learning model can accurately detect COVID-19. This technology could potentially save many lives, as patients with COVID-19 can be detected faster and quarantined appropriately, minimising exposure to others and reducing the risk of infection.
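Percentages like the ones above come from comparing a model's answers with the confirmed diagnoses. As a rough sketch of how such figures are calculated, the code below works out three common measures from the four possible outcomes of a yes/no test. The function name and the counts are invented for illustration and are not taken from the paper [4].

```python
def classification_metrics(true_pos, false_pos, true_neg, false_neg):
    """Work out common measures of how well a yes/no test performs."""
    total = true_pos + false_pos + true_neg + false_neg
    return {
        # Share of all scans that were labelled correctly
        "accuracy": (true_pos + true_neg) / total,
        # Share of patients with the disease that the model caught
        "sensitivity": true_pos / (true_pos + false_neg),
        # Share of patients without the disease that the model cleared
        "specificity": true_neg / (true_neg + false_pos),
    }


# Hypothetical counts for 200 scans, NOT from the study
metrics = classification_metrics(true_pos=90, false_pos=5, true_neg=95, false_neg=10)
print(metrics)
```

A single headline percentage can hide the difference between these measures. For a disease like COVID-19, missing a real case (low sensitivity) and raising a false alarm (low specificity) have very different consequences.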

AI used in detection of Skin Cancers

AI can also be used to detect skin cancers. In a 2018 paper published in the Annals of Oncology [5], deep learning was also used to create a neural network trained specifically on dermoscopic images. 100 cases of skin cancers and benign lesions were used to help teach the machine the differences between different types of skin cancers and how to detect them.

This resulted in a 95% accuracy rate for the neural network, whereas the dermatologists achieved an accuracy of 94%. While the specialists were outperformed in this experiment, the researchers themselves suspect that this may have been due to the artificial conditions of the lab and that, in a less artificial environment, the dermatologists and the neural network may have performed on the same level.

As you might expect, some specialists see the advancement of AI as a threat [6], as increasingly accurate algorithms may eventually produce better diagnoses than the specialists themselves.

Even Google™ has dabbled in medical AI through its company DeepMind [7]. The NHS uses DeepMind to identify health threats from data obtained over a smartphone device.

Conclusion

Why am I talking about AI?

Well, as you can see from the data above, machine learning, deep learning and AI have attracted growing interest in recent years. However, some of the connotations associated with them are negative.

Is AI really dangerous?

AI isn't as dangerous as it may sound. It can improve and benefit our lives in so many ways. Technology is only going to become more advanced as time progresses, so it is important to learn about it now to gain a deeper understanding of the world we live in.

References

[1] Russell, Stuart J.; Norvig, Peter (2009). Artificial Intelligence: A Modern Approach (3rd ed.). Upper Saddle River, New Jersey: Prentice Hall.

[2] Nautilus, Written by Liqun Luo, "Why Is the Human Brain So Efficient? How massive parallelism lifts the brain's performance above that of AI", published April 12, 2018 [Accessed July 21, 2020]

[3] Venture Beat, Written by John Brandon, Why the iRobot Roomba 980 is a great lesson on the state of AI, published November 3, 2016 [Accessed July 22, 2020]

[4] Li L, Qin L, Xu Z, Yin Y, Wang X, et al. (March 2020). "Artificial Intelligence Distinguishes COVID-19 from Community Acquired Pneumonia on Chest CT". Radiology: 200905

[5] Haenssle, H.A., Fink, C., Toberer, F., Winkler, J., Stolz, W., Deinlein, T., Hofmann-Wellenhof, R., Lallas, A., Emmert, S., Buhl, T. and Zutt, M., 2020. Man against machine reloaded: performance of a market-approved convolutional neural network in classifying a broad spectrum of skin lesions in comparison with 96 dermatologists working under less artificial conditions. Annals of Oncology, 31(1), pp.137-143.

[6] Chockley K, Emanuel E (December 2016). "The End of Radiology? Three Threats to the Future Practice of Radiology". Journal of the American College of Radiology. 13 (12 Pt A): 1415–1420.

[7] BBC News, Written by Chris Baraniuk, "Google gets access to cancer scans", published August 31, 2016 [Accessed July 26, 2020]